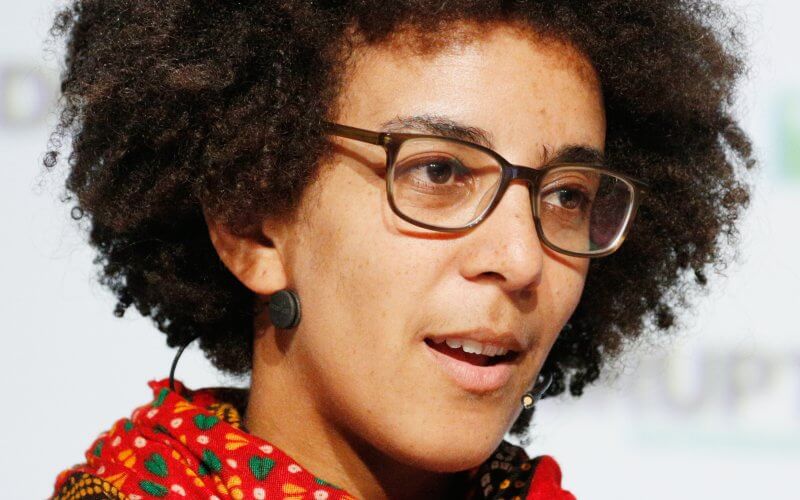

Dr. Timnit Gebru is the founder and Executive Director of the Distributed AI Research Institute (DAIR). She is a researcher in artificial intelligence, working to reduce the potential negative impacts of AI. Until her recent firing from Google, which ignited a labor movement resulting in the first union to be formed by tech workers at Google, Dr. Gebru co-led the Ethical Artificial Intelligence research team. Before her work at Google, she did a postdoc at Microsoft Research, New York City, in the FATE (Fairness, Transparency, Accountability, and Ethics in AI) group, where she worked on algorithmic bias and the ethical implications underlying projects aiming to gain insights from data.

Born in Addis Ababa, Ethiopia, Dr. Gebru had a difficult upbringing. After her father’s early death, she was raised by her mother, an economist. Fleeing the turmoil of the Eritrean-Ethiopian War, she secured political asylum in the US, an experience she characterized as ‘miserable.’

Settling in Somerville, Massachusetts, her academic path was marred by racial prejudice. “I started to experience racially based discrimination, with some teachers refusing to allow me to take certain Advanced Placement courses, despite being a high-achiever,” she recounted.

Her path toward becoming a proponent of technology ethics was spurred by an encounter with law enforcement. A friend of hers, a Black woman, was assaulted in a bar, and Dr. Gebru called the police to report it. She says that instead of the assault report being filed, her friend was arrested and remanded to a cell. This incident galvanized her commitment to addressing systemic racism and disparities, culminating in her dedication to reshaping the technology landscape.

She went on to Stanford University, where she earned her Bachelor of Science and Master of Science degrees in electrical engineering concurrently. During this time, she contributed to designing circuits and signal processing algorithms for Apple products, including the first iPad. In 2017, Dr. Gebru earned her Ph.D. in computer vision under the guidance of Fei-Fei Li.

During her doctoral studies, Dr. Gebru contributed substantially to the discourse surrounding artificial intelligence. She wrote an unpublished paper that raised concerns about the trajectory of AI and stressed the need for diversity within the field. Drawing on her own encounters with law enforcement and on a ProPublica investigation into predictive policing, she examined how human biases propagate into machine learning models. Her critique extended to gender dynamics in the industry, harassment at conferences, and the uncritical veneration of prominent figures within the field.

In 2013, Dr. Gebru joined Fei-Fei Li’s lab at Stanford. Her work applied data mining techniques to publicly accessible images, motivated by the funds that governmental and non-governmental entities allocate to gathering information about communities. Combining deep learning with Google Street View data, she estimated the socioeconomic attributes of United States neighborhoods, showing that characteristics such as voting patterns, income, race, and education can be inferred from observations of vehicles. Dr. Gebru found that communities with more pickup trucks than sedans were more likely to vote for the Republican party. The analysis covered over 15 million images from the most populous US cities.

In subsequent years, Dr. Gebru worked to foster inclusivity and diversity within the AI field. Attending the Neural Information Processing Systems (NIPS) conference in 2015, she saw how underrepresented Black researchers were, which prompted her to co-found Black in AI with Rediet Abebe. In 2017, she joined Microsoft’s Fairness, Accountability, Transparency, and Ethics in AI (FATE) lab, where she explored biases in AI systems and how team diversity could help remedy them. Dr. Gebru coauthored the influential research paper “Gender Shades,” which uncovered biases in facial recognition technology.

After moving to Google in 2018, Dr. Gebru took on a pivotal role in addressing AI ethics alongside Margaret Mitchell. Their collaborative effort aimed to improve the societal benefits achievable through technology. In 2019, she joined other AI researchers in advocating against the sale of Amazon’s biased facial recognition technology to law enforcement. Dr. Gebru expressed reservations about using facial recognition for law enforcement and security purposes, citing its current risks.

Dr. Gebru was among six coauthors of the research paper “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” The paper examined the risks associated with large language models, including their environmental and financial costs, their inscrutability and the hidden biases it can conceal, their inability to grasp underlying concepts, and their potential for deceptive use. In December 2020, Google terminated her employment, citing the paper’s alleged oversight of relevant research as the reason.

Undeterred, Dr. Gebru announced plans in June 2021 to establish the Distributed Artificial Intelligence Research Institute (DAIR), modeled after her Google Ethical AI work and Black in AI involvement. Launched on December 2, 2021, DAIR is set to explore AI’s impact on marginalized groups, particularly in Africa and among African immigrants in the US. She says, “There’s a real danger of systematizing our societal discrimination [through AI technologies]. What I think we need to do — as we’re moving into this world full of invisible algorithms everywhere — is to be very explicit, or have a disclaimer, about what our error rates are like.”

One early project will employ AI to analyze South African township satellite imagery, investigating apartheid’s enduring effects. The analysis will likely uncover patterns of development, access to resources, and socio-economic conditions that might reflect the historical inequalities resulting from apartheid policies. Through this project, Gebru’s goal is to shed light on the long-lasting effects of apartheid and contribute to the larger discourse on social justice and equality. The utilization of AI to analyze these images could reveal insights that might have been otherwise difficult to discern, offering valuable information for policy-makers, researchers, and advocates working towards addressing historical injustices and promoting equitable development in these communities.

On AI and regulation, Dr. Gebru says, “We’re seeing a kind of a Wild West situation with AI and regulation right now. The scale at which businesses adopt AI technologies isn’t matched by clear guidelines to regulate algorithms and help researchers avoid the pitfalls of bias in datasets. We need to advocate for a better system of checks and balances to test AI for bias and fairness and help businesses determine whether certain use cases are appropriate for this technology.”